A project undertaken during CG Art at Nord University.

Step 1: Use a good camera to take numerous photos of my own head from every angle, covering a full 360 degrees. I then used RealityCapture, a photogrammetry program owned by Epic Games, to reconstruct the photos into a rough 3D model. This software is often used to make MetaHumans. Afterwards I trimmed the excess noise off the model until I was left with just the face.

Step 2: Import the rough model into Maya. There are a number of ways to clean up and retopologize a facial scan, but I chose to use the scan to create a MetaHuman.

You can create a MetaHuman based on a 3D face scan, like the one I had. The resulting model is then accessible in Unreal Engine, and from there you can export it out of the engine and into Maya.

Step 3: Retopologize the face, making sure the "geography" (the edge flow) of the facial features follows the actual muscles of a human face.

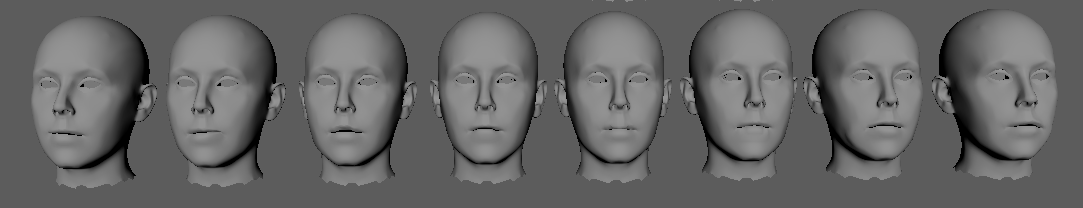

Step 4: Duplicate the model many, many times, and change one little thing about each copy. Have one with an eye closed. One with the left corner of the mouth pointing up. One with the mouth pointing down. Over and over and over.
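One way to think about these duplicates: each copy is the neutral face with a few vertices moved. Here is a minimal sketch in plain Python (not Maya's API; the toy vertex data and the names `neutral`, `left_smile` and `vertex_deltas` are made up for illustration) that lists which vertices a copy actually changes:

```python
# Hypothetical toy data: each mesh is a list of (x, y, z) vertex positions.
neutral = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
left_smile = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.5, 0.0)]


def vertex_deltas(base, variant, tolerance=1e-6):
    """Return {vertex index: (dx, dy, dz)} for every vertex that moved."""
    if len(base) != len(variant):
        raise ValueError("variant must keep the same vertex count as the base")
    deltas = {}
    for index, (b, v) in enumerate(zip(base, variant)):
        offset = tuple(vc - bc for bc, vc in zip(b, v))
        # Keep only vertices that moved by more than the tolerance.
        if any(abs(component) > tolerance for component in offset):
            deltas[index] = offset
    return deltas


smile_deltas = vertex_deltas(neutral, left_smile)
print(smile_deltas)  # {2: (0.0, 0.5, 0.0)}
```

The vertex-count check is the reason to duplicate the retopologized model instead of modelling each expression from scratch: every copy has to keep the same vertices as the original, only in different positions.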

Step 5: Import into the game engine. In this case I chose Unity. To get a fully fleshed-out face that can be animated, you first have to set up the "blendshapes" (different versions of the model that the program blends between, like RightEyeOpen -> RightEyeShut) in Maya, then export the model using Maya's Game Exporter. You can then adjust the blend between shapes within the Unity editor and save the values as keyframes for animation.
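To make the idea of "blending between shapes" concrete, here is a rough sketch in plain Python of what happens conceptually. It is not Unity's or Maya's actual code: the function names `blend` and `weight_at`, the one-vertex "mesh" and the keyframe values are all assumptions for illustration. Weights run from 0 to 100, matching the default slider range in Unity, and keyframes are interpolated linearly for simplicity (real animation curves can ease in and out).

```python
def blend(base, targets, weights):
    """Move each base vertex toward every target by its weight (0-100)."""
    result = []
    for i, b in enumerate(base):
        position = list(b)
        for name, weight in weights.items():
            t = targets[name][i]
            for axis in range(3):
                position[axis] += (weight / 100.0) * (t[axis] - b[axis])
        result.append(tuple(position))
    return result


def weight_at(keyframes, time):
    """Linearly interpolate a weight from (time, weight) keyframes.

    Assumes the keyframes are sorted by strictly increasing time.
    """
    if time <= keyframes[0][0]:
        return keyframes[0][1]
    if time >= keyframes[-1][0]:
        return keyframes[-1][1]
    for (t0, w0), (t1, w1) in zip(keyframes, keyframes[1:]):
        if t0 <= time <= t1:
            fraction = (time - t0) / (t1 - t0)
            return w0 + fraction * (w1 - w0)


# Hypothetical one-vertex "eyelid": open at y = 1, shut at y = 0.
right_eye_open = [(0.0, 1.0, 0.0)]
targets = {"RightEyeShut": [(0.0, 0.0, 0.0)]}

# Two keyframes: fully open at 0 seconds, fully shut at 1 second.
blink = [(0.0, 0.0), (1.0, 100.0)]

halfway = weight_at(blink, 0.5)
print(halfway)  # 50.0
print(blend(right_eye_open, targets, {"RightEyeShut": halfway}))  # [(0.0, 0.5, 0.0)]
```

A weight of 0 leaves the face as it is and 100 reproduces the target exactly, so a blink is just the `RightEyeShut` weight going up and back down over time.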